Previous blogs discussed the value of conducting a data quality assessment so you have fact-based information to improve your project control system. Why is conducting a schedule and cost data quality assessment important? Project managers, functional managers, upper level management, and your customer must have confidence in the data to provide relevant, timely, and actionable information for effective management visibility and control.

Project managers need credible project schedule, budget, and resource plans that accurately model how the project’s work teams intend to perform the work and measure performance. When project personnel create realistic plans factoring in likely risks, this increases the potential to meet or surpass schedule, cost, and technical objectives. These outcomes are good for the bottom line and make for a happy customer.

What’s the objective for conducting a schedule and cost data quality assessment? The purpose is to gain an understanding of the data content, data integration and traceability, toolset configuration, workflow process, and project control practices that could compromise the usefulness of the data. With fact-based information from the assessment, you are in a better position to identify the root cause of the issues. You can then determine your strategy to make project control system improvements so it is easier for project personnel to create quality data from the beginning.

What’s the process?

Step 1. Determine the scope of the data quality assessment. This is dependent on your business objectives. Do you want to conduct an assessment on a single project before a scheduled customer review? Do you want to do a sampling of projects using a set of selection criteria such as the type of work, complexity, or risk as part of an overall process improvement effort? Or, do you want to make the data quality assessment a regular part of the monthly status and analysis cycle?

What data do you want to include? At a minimum, consider reviewing the scope of work defined in the work breakdown structure (WBS) and the content of the related work organization data coding structures along with the network schedule, resource requirements, and cost data. It could also include basis of estimate (BOE), risk/opportunity, subcontractor, or other source data project personnel use to produce the schedule and cost plans. Actual costs could be included to verify interfaces with business systems such as accounting. Workflow systems used for work authorization or change control could also be included.

Identify the single, authoritative source for data you want to review. Verify project personnel are not editing or modifying the source data in a secondary toolset to paint a different picture of what is occurring with a project.

Step 2. Create a repeatable process for reviewing the data. Keep it simple and easy to use. Align your process with industry standards or best practices such as the EIA-748 Standard for Earned Value Management Systems (EVMS) and the related NDIA Integrated Program Management Division (IPMD) EIA-748 Intent Guide or the NDIA IPMD Planning and Scheduling Excellence Guide (PASEG).

Consider creating a data traceability checklist as well as data quality checklists for the schedule and cost data. Include an explanation of why a specific data quality check matters so you are educating project personnel as part of the process. For example, when someone sets a hard constraint date on a schedule activity, it affects the critical path method (CPM) forward and backward pass early and late date calculations. The impact? You may not be getting an accurate picture of the project’s likely completion date.
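To make the hard constraint example concrete, here is a minimal sketch of a CPM forward and backward pass in Python. The three-activity chain, the durations, and the `mandatory_finish` parameter are all hypothetical, and the way the constraint pins both the early and late finish is an assumption about how a scheduling tool treats a hard constraint; your toolset may behave differently.

```python
# Hypothetical chain A -> B -> C with finish-to-start ties and no lags.
durations = {"A": 5, "B": 10, "C": 3}  # working days
predecessors = {"A": [], "B": ["A"], "C": ["B"]}
successors = {"A": ["B"], "B": ["C"], "C": []}
order = ["A", "B", "C"]  # already in topological order


def cpm(mandatory_finish=None):
    """Return (project finish day, total float per activity).

    mandatory_finish maps an activity to a hard finish day that overrides
    the calculated dates in both passes (assumed tool behavior).
    """
    mandatory_finish = mandatory_finish or {}

    # Forward pass: early start and early finish.
    early_start, early_finish = {}, {}
    for act in order:
        early_start[act] = max(
            (early_finish[p] for p in predecessors[act]), default=0
        )
        early_finish[act] = early_start[act] + durations[act]
        if act in mandatory_finish:
            early_finish[act] = mandatory_finish[act]  # constraint wins over logic

    project_finish = max(early_finish.values())

    # Backward pass: late finish and late start.
    late_start, late_finish = {}, {}
    for act in reversed(order):
        late_finish[act] = min(
            (late_start[s] for s in successors[act]), default=project_finish
        )
        if act in mandatory_finish:
            late_finish[act] = mandatory_finish[act]  # constraint wins over logic
        late_start[act] = late_finish[act] - durations[act]

    total_float = {act: late_start[act] - early_start[act] for act in order}
    return project_finish, total_float


logic_only = cpm()
constrained = cpm({"B": 12})  # B forced to finish on day 12
print(logic_only)   # (18, {'A': 0, 'B': 0, 'C': 0})
print(constrained)  # (15, {'A': -3, 'B': -3, 'C': 0})
```

With logic alone, the chain finishes on day 18. Once activity B is pinned to day 12, the calculated finish moves to day 15 even though the work still takes 18 days, and the gap shows up as negative float on A and B.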

You could also use these checklists for:

- The routine monthly status and analysis cycle. This helps project personnel to identify and proactively correct data entry errors or other data issues every reporting cycle. This increases everyone’s confidence in the data.

- Conducting an internal baseline review prior to setting the performance measurement baseline (PMB) for a project. These reviews help to verify everyone involved has a shared understanding of the project’s objectives, schedule plan, budget plan, resource requirements, and associated risks/opportunities. It provides an opportunity to correct data quality issues before you set the PMB.

The data quality assessment can be a combination of manual and automated checks. There are commercial off-the-shelf (COTS) tools such as Deltek’s Acumen Fuse to help you analyze the construction of a network schedule developed in Microsoft Project, Oracle Primavera P6, or Deltek’s Open Plan.

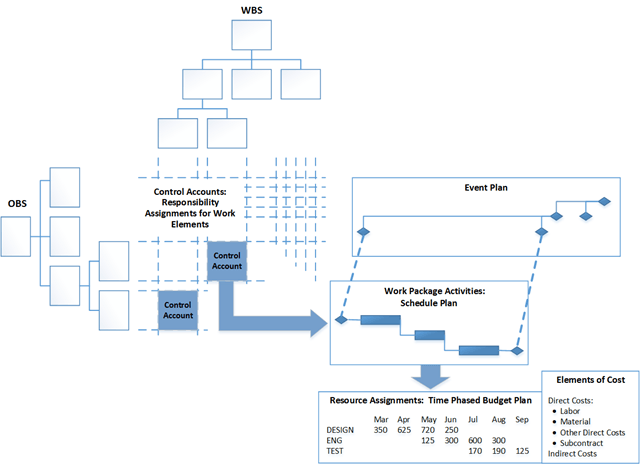

Data traceability checklist. You should be able to trace the project control data from the bottom up or top down through the various project control artifacts and tell the same story. The work organization coding is the foundation to perform the data traces. This includes the WBS with related statement of work (SOW) or deliverable requirements, project organization breakdown structure (OBS), and control accounts with related work packages or planning packages. The accompanying image illustrates this framework.

For example, you are able to trace a WBS element down through a control account to the work package and activity level. When summing values starting at the work package level, you get the same value at the total project level for the WBS and OBS. You can confirm the control account and detailed work package/planning package time phased budget is consistent with the related resource loaded schedule activity’s start and end dates. Schedule activity status and related work package earned value assessment tell the same story. You can verify control account work authorizations are consistent with the control account scope of work, related network schedule resource loaded activities, and time phased budget. You can reconcile the accounting system actual costs with actual costs for performance reporting in the EVM cost toolset.
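A couple of these traces can be automated with very little code. The sketch below is illustrative only: the `WorkPackage` record, the sample budgets, and the `authorized` dictionary are hypothetical stand-ins for data you would export from your own cost toolset and work authorization system.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class WorkPackage:
    wp_id: str
    control_account: str
    wbs: str
    obs: str
    budget: int


# Hypothetical sample data; real values would come from the cost toolset.
work_packages = [
    WorkPackage("WP-001", "CA-10", "1.1", "ENG", 40_000),
    WorkPackage("WP-002", "CA-10", "1.1", "ENG", 25_000),
    WorkPackage("WP-003", "CA-20", "1.2", "TEST", 15_000),
    WorkPackage("WP-004", "CA-20", "1.2", "", 20_000),  # missing OBS code
]

# Hypothetical authorized budget per control account (work authorizations).
authorized = {"CA-10": 65_000, "CA-20": 30_000}


def rollup(packages, attribute):
    """Sum work package budgets by a coding attribute, skipping blank codes."""
    totals = defaultdict(int)
    for wp in packages:
        code = getattr(wp, attribute)
        if code:  # uncoded records drop out of the rollup
            totals[code] += wp.budget
    return dict(totals)


def trace_issues(packages, authorized_budgets):
    """Return a list of places where the data does not tell the same story."""
    issues = []
    wbs_total = sum(rollup(packages, "wbs").values())
    obs_total = sum(rollup(packages, "obs").values())
    if wbs_total != obs_total:
        issues.append(f"WBS total {wbs_total} != OBS total {obs_total}")

    ca_totals = rollup(packages, "control_account")
    for ca, auth in sorted(authorized_budgets.items()):
        planned = ca_totals.get(ca, 0)
        if planned != auth:
            issues.append(f"{ca}: work packages sum to {planned}, authorized {auth}")
    return issues


issues = trace_issues(work_packages, authorized)
for issue in issues:
    print(issue)
```

In this sample, the missing OBS code makes the OBS rollup come up short of the WBS rollup, and control account CA-20 carries more work package budget than its work authorization allows.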

Schedule data quality checklist. You want to look at the content, construction, and mechanics of the network schedule to ensure it is a useful project planning, execution, and forecasting tool. The integrity of the schedule is vital because project personnel use it every day to identify what work needs to be done and when, as well as to identify interdependencies between work team activities.

Examples of things to check for include missing logic, summary activities that include logic ties, use of leads and lags, hard date constraints, negative float, missing code assignments, invalid dates or out of sequence status, over allocated resources, or missing baseline dates. Other data quality factors to look at include the activity descriptions. Descriptions should be unique and provide a useful, short reference about the scope of work, deliverable, or measurable result. Any level of effort (LOE) activities included in the schedule should not span the duration of the project or affect the critical path calculations. Ideally, the schedule activities are resource loaded and are the basis for producing the time phased budget and estimate to complete cost data.
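If you want a feel for what an automated version of a few of these checks might look like, here is a minimal sketch. The `Activity` fields and sample activities are hypothetical, and the constraint names assume the schedule was exported with Microsoft Project style labels; a COTS analyzer will cover far more checks than this.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Activity:
    activity_id: str
    description: str
    predecessors: list = field(default_factory=list)
    successors: list = field(default_factory=list)
    constraint: Optional[str] = None
    total_float: int = 0
    wbs: str = ""
    has_baseline: bool = True


# Assumed constraint labels; adjust to match your scheduling tool's export.
HARD_CONSTRAINTS = {"Must Start On", "Must Finish On"}


def check_activity(act, first_id, last_id):
    """Return the data quality findings for one activity."""
    findings = []
    if not act.predecessors and act.activity_id != first_id:
        findings.append("missing predecessor")
    if not act.successors and act.activity_id != last_id:
        findings.append("missing successor")
    if act.constraint in HARD_CONSTRAINTS:
        findings.append("hard constraint")
    if act.total_float < 0:
        findings.append("negative float")
    if not act.wbs:
        findings.append("missing WBS code")
    if not act.has_baseline:
        findings.append("missing baseline dates")
    return findings


# Hypothetical schedule: A100 is the project start, A300 the project finish.
schedule = [
    Activity("A100", "Design review", successors=["A200"], wbs="1.1"),
    Activity("A200", "Build prototype", predecessors=["A100"],
             constraint="Must Finish On", total_float=-4, wbs="1.2"),
    Activity("A300", "Test prototype", has_baseline=False),
]

report = {}
for act in schedule:
    findings = check_activity(act, first_id="A100", last_id="A300")
    if findings:
        report[act.activity_id] = findings

for activity_id, findings in report.items():
    print(activity_id, findings)
```

Pairing each finding with a short explanation of why it matters, as suggested for the checklists, helps project personnel learn from the report rather than just clear it.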

Cost data quality checklist. You want to look at the results of generating the time phased distributed budget and cost estimate to complete from the schedule resource loaded activity details. Identify any disconnects or missing data that could compromise the ability to accurately determine, monitor, and control the cost to perform the work as scheduled.

Examples of things to check for include verifying that the budget at the total contract level, less any management reserve, equals the budget allocated for the PMB. The bottom up distributed budget values at the work package/planning package level should sum to the budget allocated to each control account, and the control account budgets should sum to the same total for the PMB. Are there any missing direct costs or indirect costs in the toolset that should have been calculated? Are there any missing expected cost values for the current reporting period? For example, are there work packages with earned value but no actual costs, or actual costs with no earned value?
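The budget reconciliation and the earned value versus actual cost checks are simple arithmetic, so they are easy to script. The sketch below uses made-up numbers and hypothetical function names; it follows the checks as described above and is not a complete cost data validation.

```python
def budget_findings(contract_budget, management_reserve, pmb_budget,
                    control_accounts, detail_budgets):
    """Reconcile budgets top down and bottom up; return any disconnects."""
    findings = []
    if contract_budget - management_reserve != pmb_budget:
        findings.append("contract budget less management reserve != PMB")
    if sum(control_accounts.values()) != pmb_budget:
        findings.append("control account budgets do not sum to PMB")
    for ca, budget in sorted(control_accounts.items()):
        detail = sum(detail_budgets.get(ca, {}).values())
        if detail != budget:
            findings.append(
                f"{ca}: detail budget {detail} != control account budget {budget}"
            )
    return findings


def performance_findings(period):
    """Flag work packages where earned value and actual cost disagree."""
    findings = []
    for wp, (earned, actual) in sorted(period.items()):
        if earned > 0 and actual == 0:
            findings.append(f"{wp}: earned value with no actual cost")
        elif actual > 0 and earned == 0:
            findings.append(f"{wp}: actual cost with no earned value")
    return findings


# Hypothetical sample values for one project.
control_accounts = {"CA-10": 300_000, "CA-20": 150_000}
detail_budgets = {
    "CA-10": {"WP-001": 200_000, "WP-002": 100_000},
    "CA-20": {"WP-003": 90_000, "PP-001": 50_000},  # sums to 140,000
}
# Current period (earned value, actual cost) per work package.
current_period = {
    "WP-001": (20_000, 18_500),
    "WP-002": (5_000, 0),
    "WP-003": (0, 7_200),
}

budget_issues = budget_findings(500_000, 50_000, 450_000,
                                control_accounts, detail_budgets)
performance_issues = performance_findings(current_period)
print(budget_issues)
print(performance_issues)
```

A flagged work package is a prompt to investigate, not proof of an error. For instance, earned value with no actual cost may simply reflect an invoice that has not posted yet.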

Step 3. Once you have identified the issues with the data, the next step is to determine the root cause. Then you can figure out what your options are to make improvements. Common root causes:

- Not creating useful data to begin with. There can be a mixture of reasons this occurs. It could be project personnel don’t know what they should be doing or how to use the toolsets effectively. Perhaps they are lacking sufficient process direction or there are disconnects in the workflow. A common culprit is data coding requirements that are not established or set up properly in the toolset at the beginning of a project.

- Toolset configuration hinders data integration. As noted in a previous blog, it helps to have a defined project control data architecture in place. A good understanding of your business systems as well as where source data resides is essential. How do all the puzzle pieces fit together so you can create an integrated data framework for the schedule and cost toolsets?

- Lack of process ownership. This was also mentioned in the previous blog. When process ownership is lacking, people keep pointing at someone else as the source of the problem. The result? Nothing gets resolved.

We’ll talk more about the root cause analysis and how you can solve data quality issues in the next blog on this topic.

Looking to create a data quality assessment process for your projects? PrimePM has the cross-functional resources, checklists, and tools such as Acumen Fuse to help you get started. PrimePM is a reseller of Deltek’s Project and Portfolio Management (PPM) solutions including Acumen Fuse.