When was the last time you looked at your process for generating routine monthly project status and analysis reports? Does it take days or weeks to produce contractual project performance reports for your customer? How confident are you that your data provides timely, relevant, and useful information for making management decisions?

Data quality and integration issues can significantly increase the time and effort to produce deliverable reports because project personnel are often making ad-hoc manual data “adjustments”. Tackling the root cause of poor data quality can dramatically reduce the time spent producing required outputs and increase the usefulness of the data.

What are some indicators you may have data quality issues that are affecting the time required to produce outputs? Do project personnel routinely:

- Manually export, manipulate, and edit the source data before producing customer project performance reports?

- Fix the same schedule or cost data disconnects every month without investigating the root cause?

- Use software options to fix or set estimate to complete (ETC) data? Edit the ETC data to match a target number?

- Overwrite or edit source data coming from other business systems or subcontractors?

- Fail to establish a schedule baseline or cost baseline? Change historical budget or earned value data?

If these types of activities are occurring, those data “adjustments” are wasting time and money. They also raise the question of data reliability. Are you getting an accurate picture of what’s going on with the project? How do you know? Are you about to get an unhappy project schedule or budget “surprise”?

What if you could produce the reports in two hours instead of two days or two weeks? And, with full confidence in the data to provide the relevant information you need? What if you could reclaim all of that effort spent on data “adjustments” every month? Think of the time and cost savings you could achieve by tackling the underlying reasons it takes so many people so many hours to produce contractually required or internal management reports. It doesn’t need to be this hard.

How do you achieve that efficiency and confidence in the data quality? It takes a commitment to create a single source of data (the one source of truth) and to establish quality data from the start by following a practical project control process. What do you need to have in place?

1) Project control best practices designed for project personnel. The goal is to establish a shared understanding of project control concepts and workflow procedures – who is responsible for doing what when.

So, what does “shared understanding of project control concepts” mean? Let’s take the example of the work breakdown structure (WBS). The project manager understands it is a product-oriented hierarchical breakdown of the project’s requirements, including deliverables and end items. The project manager uses the WBS as the coding framework for organizing the work and subdividing the entire contract work scope into discrete, definable, and logical increments or elements of work. However, the schedulers think it is just a software-generated outline structure in the schedule toolset. As long as there is an outline code for each activity in the schedule, they are good – mission accomplished. Then there is the accounting person, who thinks it is just a charge number they set up in the accounting system.
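To make the project manager’s view of the WBS a little more concrete, here is a minimal Python sketch of why the hierarchy matters: budgets entered at the lowest level roll up to every parent element. It assumes a dotted numbering scheme (1, 1.1, 1.1.1) purely for illustration; real WBS numbering conventions vary, and the codes and amounts below are hypothetical.

```python
from collections import defaultdict


def parent_codes(code: str) -> list[str]:
    """Return every ancestor of a dotted WBS code, e.g. '1.2.3' -> ['1', '1.2']."""
    parts = code.split(".")
    return [".".join(parts[:i]) for i in range(1, len(parts))]


def roll_up(budgets: dict[str, float]) -> dict[str, float]:
    """Sum lowest-level budgets into every WBS element above them.

    Assumes budgets are supplied only at the lowest level of the hierarchy.
    """
    totals: dict[str, float] = defaultdict(float)
    for code, amount in budgets.items():
        totals[code] += amount
        for ancestor in parent_codes(code):
            totals[ancestor] += amount
    return dict(totals)


# Hypothetical lowest-level WBS elements and their budgets.
leaf_budgets = {"1.1.1": 120.0, "1.1.2": 80.0, "1.2.1": 200.0}
for code, total in sorted(roll_up(leaf_budgets).items()):
    print(code, total)
```

An outline code or a charge number alone carries none of this parent/child meaning, which is one reason the three functional views of the WBS drift apart.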

The understanding the scheduler or accounting person has of the WBS has zero correlation to how the project manager uses the WBS. Without a shared understanding of the purpose of the WBS and how to use it, the various functional personnel are talking past each other. The result? A data coding disconnect between business systems and toolsets that only gets worse with time.

2) Clearly defined project control roles that identify the people who are responsible and accountable for outcomes. This is where the project control process meets reality: the day-to-day activities of completing and managing the project’s work effort. The workflow procedures identify who is solely responsible for ensuring the accuracy of the source data in the schedule toolset, cost toolset, accounting system, or other business system. When an issue surfaces, the process owner is responsible for identifying the root cause, communicating it to other project personnel, and resolving it as quickly as possible.

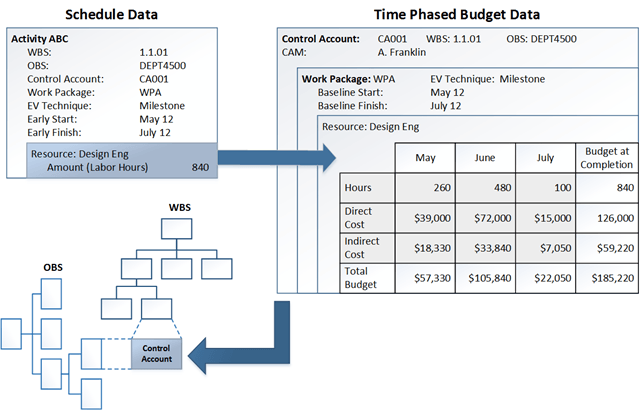

Let’s use the work organization process to illustrate what happens when everyone has a common understanding of what is expected and understands their roles and responsibilities. For this example, the project manager has the responsibility to establish their project’s WBS in accordance with the customer’s contractual requirements. They may extend the contract WBS to lower levels to establish the “just right” level of internal data detail to manage the work effort: the control account level. The project manager is also responsible for establishing the project’s organization breakdown structure (OBS) and control accounts, the intersection points between the WBS and the OBS (the person responsible for a work element).
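The idea of a control account as the intersection of a WBS element and an OBS element can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a toolset feature: the `ControlAccount` type, the `WBS-OBS` naming convention, and the manager names are all hypothetical. The one rule it enforces is that each intersection has a single accountable person.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ControlAccount:
    wbs: str      # WBS element at the control account level
    obs: str      # organization (OBS) element performing the work
    manager: str  # person accountable for the control account

    @property
    def code(self) -> str:
        # Hypothetical naming convention: WBS code and OBS code joined by a hyphen.
        return f"{self.wbs}-{self.obs}"


def build_control_accounts(assignments):
    """Create one control account per unique (WBS, OBS) intersection.

    `assignments` is an iterable of (wbs, obs, manager) tuples. Raises
    ValueError if the same intersection is assigned to two different managers.
    """
    accounts = {}
    for wbs, obs, manager in assignments:
        existing = accounts.get((wbs, obs))
        if existing is not None and existing.manager != manager:
            raise ValueError(
                f"{wbs}/{obs} has two managers: {existing.manager}, {manager}"
            )
        accounts[(wbs, obs)] = ControlAccount(wbs, obs, manager)
    return accounts


# Hypothetical WBS x OBS assignments.
assignments = [
    ("1.1", "ENG", "J. Lee"),
    ("1.1", "MFG", "R. Patel"),
    ("1.2", "ENG", "J. Lee"),
]
for account in build_control_accounts(assignments).values():
    print(account.code, account.manager)
```

Note that one WBS element (1.1 here) can produce more than one control account when several organizations perform parts of it.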

The project manager directs the project control team to set up the WBS and other coding structures in the schedule and cost toolsets. The scheduler is responsible for assigning the approved WBS, organization structure, control account, and work package codes to the schedule activities as they develop the network schedule. The project control analyst is responsible for executing an integration utility to create the work package time-phased budget data in the cost toolset from the schedule data. As a result, the schedule and cost data tell the same story and use the same set of coding structures. The following image illustrates this integration.
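Integration utilities are specific to each toolset, so as a rough illustration only, the Python sketch below shows the basic idea of deriving time-phased work package budgets from coded schedule activities. It assumes each activity carries a work package code, start and finish dates, and a budget, and it spreads the budget evenly across calendar days (a simple linear spread; actual toolsets typically apply working calendars and resource-based spreads). The activity data and the `WP01` code are hypothetical.

```python
from collections import defaultdict
from datetime import date, timedelta


def time_phase(activities):
    """Spread each activity's budget evenly over its calendar days and
    total it by (work package, month)."""
    phased = defaultdict(float)
    for act in activities:
        days = (act["finish"] - act["start"]).days + 1  # finish day is inclusive
        per_day = act["budget"] / days
        for offset in range(days):
            day = act["start"] + timedelta(days=offset)
            phased[(act["work_package"], f"{day.year}-{day.month:02d}")] += per_day
    return {key: round(value, 2) for key, value in phased.items()}


# Hypothetical schedule activities already coded to a work package.
activities = [
    {"work_package": "WP01", "start": date(2024, 1, 22),
     "finish": date(2024, 2, 10), "budget": 2000.0},
    {"work_package": "WP01", "start": date(2024, 2, 1),
     "finish": date(2024, 2, 29), "budget": 2900.0},
]
for (wp, month), amount in sorted(time_phase(activities).items()):
    print(wp, month, amount)
```

Because the budget is derived from the schedule rather than typed in separately, the cost data inherits the schedule’s coding and timing instead of drifting from it.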

The project manager also works with the accounting person to set up the charge numbers in the accounting system at the work package level, which is where the work is planned and budgeted.

The project manager maintains configuration control of the work organization coding structures and can verify, with the help of the project control team, that the source data in the schedule and cost toolsets agree. They have also worked with the accounting department so they can align the actual costs from the accounting system to the budget data in the cost toolset for performance analysis.
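One way to picture that verification step is a simple reconciliation check. The Python sketch below is a hypothetical example, not a feature of any particular toolset: it assumes budgets have been exported per work package from both the schedule and cost toolsets, that actual costs arrive by charge number, and that each charge number maps to one work package. It reports disconnects rather than “fixing” them, so the process owner can investigate the root cause.

```python
def reconcile(schedule_budget, cost_budget, actuals, charge_to_wp, tolerance=0.01):
    """Return a list of data disconnects between schedule, cost, and accounting data.

    schedule_budget, cost_budget: {work_package: total budget}
    actuals: {charge_number: actual cost}
    charge_to_wp: {charge_number: work_package}
    """
    issues = []
    for wp in sorted(set(schedule_budget) | set(cost_budget)):
        s, c = schedule_budget.get(wp), cost_budget.get(wp)
        if s is None or c is None:
            issues.append(f"{wp}: missing from {'schedule' if s is None else 'cost'} data")
        elif abs(s - c) > tolerance:
            issues.append(f"{wp}: schedule {s:,.2f} vs cost {c:,.2f}")
    for charge in sorted(actuals):
        wp = charge_to_wp.get(charge)
        if wp is None:
            issues.append(f"charge {charge}: not mapped to a work package")
        elif wp not in cost_budget:
            issues.append(f"charge {charge}: mapped to {wp}, which has no budget")
    return issues


# Hypothetical exports from the schedule toolset, cost toolset, and accounting system.
schedule_budget = {"WP01": 4900.0, "WP02": 1500.0}
cost_budget = {"WP01": 4900.0, "WP02": 1250.0}
actuals = {"C-100": 1200.0, "C-999": 300.0}
charge_to_wp = {"C-100": "WP01"}

for issue in reconcile(schedule_budget, cost_budget, actuals, charge_to_wp):
    print(issue)
```

An empty list means the three sources tell the same story at the work package level.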

With a single source for accurate data, any output from the toolsets will tell the same story. Project personnel can quickly produce data views or reports using one or more of the coding structures at any level of detail. This makes it easy to drill down into the data, do root cause analysis, produce a variety of metrics, or create project dashboards. That’s where the power of the toolsets comes into play: organizing or summarizing the source data in a variety of ways to proactively manage a project.
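When every record carries the same coding, producing a view by any coding structure becomes a simple grouping operation. The following minimal Python sketch assumes hypothetical records with `wbs`, `obs`, and `work_package` fields and a `budget` value; the same function could total actuals or earned value if those fields were present.

```python
from collections import defaultdict


def summarize(records, *fields, value="budget"):
    """Total `value` by any combination of coding fields (WBS, OBS, etc.)."""
    totals = defaultdict(float)
    for rec in records:
        key = tuple(rec[field] for field in fields)
        totals[key] += rec[value]
    return dict(totals)


# Hypothetical work package records sharing one set of coding structures.
records = [
    {"wbs": "1.1", "obs": "ENG", "work_package": "WP01", "budget": 4900.0},
    {"wbs": "1.1", "obs": "MFG", "work_package": "WP02", "budget": 1500.0},
    {"wbs": "1.2", "obs": "ENG", "work_package": "WP03", "budget": 2600.0},
]

print(summarize(records, "obs"))         # view by organization
print(summarize(records, "wbs", "obs"))  # view by control account (WBS x OBS)
```

Drilling down is just adding a field to the grouping, for example `summarize(records, "wbs", "obs", "work_package")`.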

3) Defined project control data architecture. This requires a good understanding of your company’s business systems data and processes. It takes some thought to accurately model the way you do business and translate that into a data framework for the schedule and cost toolsets. Considerations include the integration points between the business systems and project control toolsets, the activity coding needed to produce schedule-driven cost data, the level of resource planning, how charge numbers are set up, the level of control account and work package detail, and what outputs or views you need to produce. A well-thought-out data architecture ensures there is a single source for the data with defined interface points. It also eliminates the need for manual data “adjustments”.

4) Guidance for creating and integrating data at the “just right” level of data detail needed to manage the work effort. A previous blog post discussed creating a framework that project managers can use to scale project control practices to match a project’s characteristics, such as the type of work, complexity, risk levels, or contractual requirements.

If you suspect you have data quality or integration issues, what’s your next step? Think about conducting a thorough data quality assessment so you have fact-based information to determine your best path forward. Creating an accurate model of your business system data and processes should be the next priority; this will help you define the data architecture for your project control data.
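A data quality assessment can start with simple, repeatable checks. As one hypothetical example, the Python sketch below flags schedule activities whose coding is missing or does not match the approved structures. The field names, codes, and activity IDs are assumptions for illustration; a real assessment would cover many more checks (baselines, historical data changes, cost and accounting alignment).

```python
def assess_coding(activities, approved):
    """Flag schedule activities with missing or unapproved codes.

    activities: list of dicts, each with an "id" plus coding fields.
    approved: {field_name: set of approved codes}.
    Returns {field_name: [ids of activities with a missing or unapproved code]}.
    """
    findings = {field: [] for field in approved}
    for act in activities:
        for field, codes in approved.items():
            # A missing field returns None, which is never an approved code.
            if act.get(field) not in codes:
                findings[field].append(act["id"])
    return findings


# Hypothetical approved coding structures and schedule activities.
approved = {"wbs": {"1.1", "1.2"}, "work_package": {"WP01", "WP02", "WP03"}}
activities = [
    {"id": "A10", "wbs": "1.1", "work_package": "WP01"},
    {"id": "A20", "wbs": "1.3", "work_package": "WP02"},
    {"id": "A30", "wbs": "1.2"},
]

findings = assess_coding(activities, approved)
flagged = {act_id for ids in findings.values() for act_id in ids}
print(findings)
print(f"{len(flagged)} of {len(activities)} activities have coding issues")
```

Running checks like this each month turns anecdotal complaints about the data into counts you can track and trace to a root cause.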

Tackling data quality and integration issues can seem overwhelming. PrimePM can help you sort through what project personnel are currently doing, work through your business process and data requirements, and help you configure your schedule and cost toolsets. Producing data views or reports can be simple and quick when you have quality data to start with.